🚗 OutVenture: In-Car Educational AR Chatbot System

As part of the AI Capstone Design Project Course jointly hosted by LG Electronics and Sogang University in Spring 2023, our team developed an in-car educational AR chatbot system called OutVenture. Supervised by Prof. Jeung Uk Ha, the project aimed to enhance the passenger experience, especially for children, by providing real-time information about landmarks visible outside the vehicle during the drive.

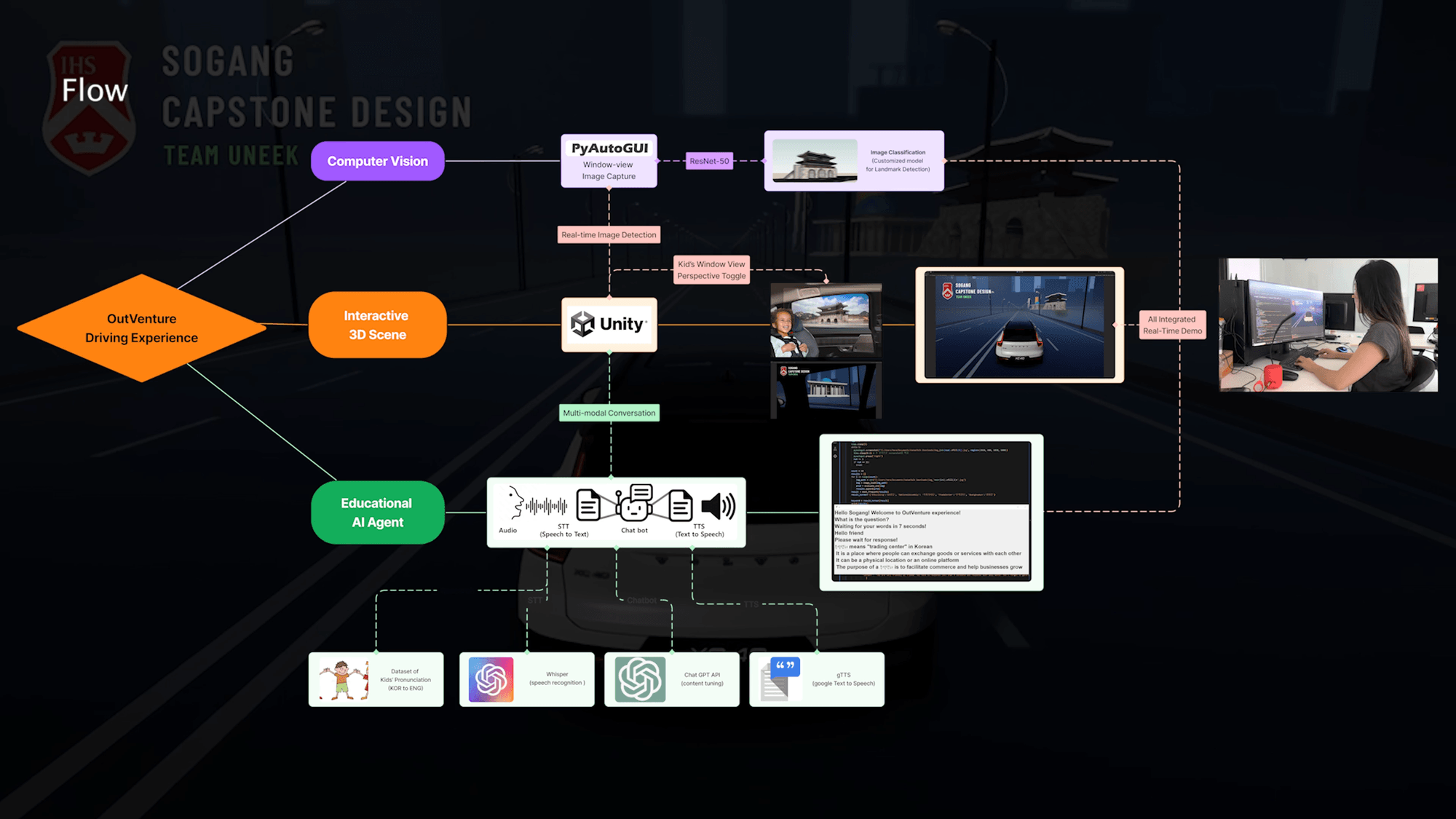

The system was built within a Unity 3D simulation environment, replicating the view from inside a moving vehicle. Using computer vision, it detects and classifies real-world landmarks in Seoul visible through the window. Once a landmark is recognized, a voice-based AI chatbot delivers an educational explanation about it through real-time speech synthesis.

The goal was to create an interactive experience that combines real-time image classification, speech processing, and 3D game simulation into a cohesive AR-assisted learning platform.

We were awarded 3rd Prize (out of 36 teams) in the Capstone Design Project Contest at Sogang University.

🔁 System Flow

▶️ Demo Video

My Contribution

I was primarily responsible for building the image classification model and making sure it could run inference in real time. I used ResNet-18 as the classifier and connected it to the Unity environment via PyAutoGUI. To improve both accuracy and efficiency, I extended the model to be multimodal, so that it takes not only visual input but also positional metadata from the Unity simulation, such as coordinates and viewing angles.
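To illustrate the multimodal idea, here is a minimal sketch of one common way to combine image features with positional metadata: encode the pose as a small vector and concatenate it with the image embedding (late fusion). This is not the project's actual code. The names `encode_pose`, `fuse`, and `MAP_HALF_EXTENT` are hypothetical, the map size is an assumed value, and the zero vector only stands in for the 512-dimensional embedding that ResNet-18 produces before its final layer.

```python
import math
from typing import List, Sequence

# Hypothetical half-width of the simulated map in Unity world units (assumption).
MAP_HALF_EXTENT = 500.0


def encode_pose(x: float, z: float, yaw_deg: float) -> List[float]:
    """Turn a position and viewing angle into a small feature vector.

    Coordinates are scaled to roughly [-1, 1]. The yaw angle is encoded as
    (sin, cos) so that 359 degrees and 1 degree end up close together.
    """
    yaw = math.radians(yaw_deg)
    return [x / MAP_HALF_EXTENT, z / MAP_HALF_EXTENT, math.sin(yaw), math.cos(yaw)]


def fuse(image_features: Sequence[float], pose_features: Sequence[float]) -> List[float]:
    """Late fusion by concatenation: image embedding followed by pose features."""
    return list(image_features) + list(pose_features)


# Stand-in for the 512-dimensional ResNet-18 embedding of one frame.
image_embedding = [0.0] * 512
fused = fuse(image_embedding, encode_pose(x=250.0, z=-125.0, yaw_deg=90.0))
print(len(fused))  # 516
```

In a setup like this, the fused vector would be fed to a small classification head in place of the image embedding alone, which lets the pose narrow down which landmarks are plausible from the current location.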

In addition, I helped design the chatbot integration pipeline, which connected the outputs of the classification model to a voice-based chatbot using external APIs. The chatbot used the model’s predictions to provide real-time, educational explanations about recognized landmarks through speech synthesis.
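The hand-off from classifier to chatbot could be sketched roughly as follows. This is an illustration under assumptions, not the pipeline we shipped: the `LandmarkAnnouncer` class, the confidence threshold, the prompt wording, and the example landmark names are all hypothetical, and the calls to the external chatbot and speech synthesis APIs are left out entirely. The sketch only shows the gating step that decides when a prediction should be passed on.

```python
from typing import Optional

# Assumed minimum confidence before a prediction is passed on (hypothetical value).
CONFIDENCE_THRESHOLD = 0.8


class LandmarkAnnouncer:
    """Decides when a classifier prediction should trigger a chatbot explanation."""

    def __init__(self, threshold: float = CONFIDENCE_THRESHOLD) -> None:
        self.threshold = threshold
        self.last_announced: Optional[str] = None

    def next_prompt(self, label: str, confidence: float) -> Optional[str]:
        """Return a chatbot prompt for a new, confident prediction, else None."""
        if confidence < self.threshold:
            return None  # too uncertain to announce
        if label == self.last_announced:
            return None  # same landmark as the previous announcement
        self.last_announced = label
        return (
            f"Explain {label} in Seoul to a child in two or three short, "
            "friendly sentences."
        )


# Illustrative per-frame predictions (label, confidence); the values are made up.
announcer = LandmarkAnnouncer()
frames = [("N Seoul Tower", 0.55), ("N Seoul Tower", 0.93),
          ("N Seoul Tower", 0.95), ("Gyeongbokgung Palace", 0.90)]
prompts = [announcer.next_prompt(label, conf) for label, conf in frames]
spoken = [p for p in prompts if p is not None]
print(len(spoken))  # 2
```

Gating on confidence and on label changes keeps the chatbot from repeating the same explanation on every frame while a landmark stays in view.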

Team: Sungmin Kang, Hera Kang, Sungjoon Kim, Jisu Park